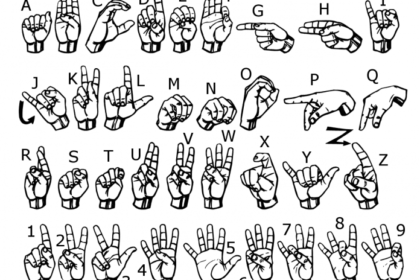

How to Build Sign Language Recognition Using CNN and OpenCV

This blog post is a step-by-step guide to building a sign language detection model with a convolutional neural network (CNN). It uses the Sign Language MNIST dataset, which contains images of the American Sign Language alphabet, implements the model with Keras and OpenCV, and runs on Google Colab. The system captures images from a webcam, predicts the letter being signed, and achieves 94% accuracy.
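The final prediction step can be sketched roughly as follows. This is a minimal illustration, not the post's actual code: it assumes the trained model returns one score per letter, indexed in alphabetical order (0 for "A" through 25 for "Z"), and the function name `predict_letter` and the sample scores are hypothetical.

```python
import string

# Assumption: class index i maps to the i-th uppercase letter (0 -> "A", 25 -> "Z").
LETTERS = string.ascii_uppercase


def predict_letter(scores):
    """Return (letter, score) for the highest-scoring class.

    `scores` stands in for the model's per-class output for a single frame.
    """
    if len(scores) != len(LETTERS):
        raise ValueError(f"expected {len(LETTERS)} scores, got {len(scores)}")
    # Index of the largest score (a plain-Python argmax).
    best = max(range(len(scores)), key=lambda i: scores[i])
    return LETTERS[best], scores[best]


# Hypothetical model output for one webcam frame: class 2 dominates.
sample_scores = [0.0] * 26
sample_scores[2] = 0.9
letter, confidence = predict_letter(sample_scores)
print(letter, confidence)
```

In a real pipeline the scores would come from the Keras model's output for a preprocessed webcam frame, and a confidence threshold could be used to ignore frames where no class clearly wins.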
